Electron had its time. AI-native apps need something else.

Why Tauri 2.0 + Rust is becoming the default for desktop software in 2026

A decade ago, Electron felt like magic.

Write HTML, CSS, and JavaScript. Wrap it in a shell. Ship a desktop app for Windows, macOS, and Linux. No C++. No Cocoa. No Win32 misery. Web tech everywhere.

And it worked. Amazingly.

VS Code. Discord. Slack. Notion. Figma’s desktop client. Electron quietly became the operating system of modern productivity.

But here’s an uncomfortable truth that most developers now recognize:

Electron solved the cross-platform UI problem for the web age.

It wasn’t designed for the AI-native age.

AI-native applications are not chat windows and Markdown editors. They are real-time multimodal systems in which the UI runs alongside local inference engines, vector databases, GPU pipelines, and encrypted data vaults.

That changes everything.

And this is where Tauri 2.0 stepped in – not as “Electron but smaller”, but as a different architectural answer to a different era of software.

This article explains what’s really changing in 2026, why Electron is starting to reach hard limits, where Tauri 2.0 fits in, what Rust enables that JavaScript never will, and how AI-native desktop software is being built right now.

No marketing fluff. No hype. Just real tradeoffs.

Part 1 – The Hidden Cost of Electron’s Success

The core idea of Electron is simple:

Ship Chromium + Node.js in every application.

This was genius in 2015. Browsers had matured. Web development talent exploded. UI tooling became world-class. Wrapping Chromium meant immediate cross-platform compatibility.

But every architectural shortcut comes with a price tag.

Chromium Tax

Every Electron app includes:

- The full Chromium engine

- The full Node.js runtime

- A JS-to-native IPC layer

- A sandbox layer that protects you only if you configure it properly

The result:

Even an empty Electron window typically uses 150–300 MB of RAM in an idle state.

In 2026, that seems small – until you look at what modern AI-native applications are doing.

A local 8B–13B-parameter LLM quantized to 4 bits needs roughly:

- 4–8 GB system RAM

- 4–12 GB VRAM if GPU accelerated

Add:

- Vector database (SQLite + embeddings cache)

- Background file indexer

- Real-time transcription or vision models

- Encrypted local storage

It’s no longer acceptable for your UI shell to burn 300 MB just to exist.

That’s waste. And waste compounds.

When users complain that “AI apps eat up memory,” UI containers are part of the problem.
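The model figures above are easy to sanity-check: weight memory is roughly parameter count × bits per weight ÷ 8. Here is a minimal sketch of that arithmetic; it deliberately ignores the KV cache, activations, and runtime overhead, so treat it as a floor rather than a full budget.

```rust
/// Approximate memory for model weights alone, in GiB.
/// Deliberately ignores KV cache, activations, and runtime overhead.
fn weight_gib(params: f64, bits_per_weight: f64) -> f64 {
    let bytes = params * bits_per_weight / 8.0;
    bytes / (1024.0 * 1024.0 * 1024.0)
}

fn main() {
    // 4-bit quantization means half a byte per weight.
    for (name, params) in [("8B", 8.0e9), ("13B", 13.0e9)] {
        let gib = weight_gib(params, 4.0);
        println!("{name} at 4-bit: {gib:.2} GiB of weights");
    }
}
```

This prints about 3.73 GiB for an 8B model and 6.05 GiB for a 13B model. The context window, KV cache, and runtime overhead push real usage above those floors, which is why the practical range is quoted as 4–8 GB.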

Startup and Update Gravity

Electron applications ship big. Typical product installers in 2026:

- Mid-sized Electron app: 120–180 MB

- Heavy apps: 200–300 MB+

That’s before model downloads.

For AI-native software shipping frequent updates, this becomes painful:

- Slow CI/CD artifact distribution

- Long update downloads for users

- High bandwidth costs at scale

- Slow cold-start times

In isolation, these are tolerable. Together, they become a drag.
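To put a rough number on the bandwidth point, here is a back-of-the-envelope sketch. The user count and release cadence are hypothetical inputs, the package sizes are picked from the ranges quoted in this article, and the sketch assumes every user downloads the full package on every release (no delta updates).

```rust
/// Monthly update egress in terabytes (decimal units: 1 TB = 1,000,000 MB).
/// Assumes every user downloads the full package on every release.
fn monthly_egress_tb(package_mb: f64, users: f64, releases_per_month: f64) -> f64 {
    package_mb * users * releases_per_month / 1_000_000.0
}

fn main() {
    // Hypothetical inputs, not measurements.
    let users = 100_000.0;
    let releases = 2.0;
    let electron = monthly_egress_tb(150.0, users, releases);
    let tauri = monthly_egress_tb(8.0, users, releases);
    println!("150 MB package: {electron:.1} TB/month");
    println!("8 MB package:   {tauri:.1} TB/month");
}
```

With these inputs the larger package moves about 30 TB a month versus about 1.6 TB. Delta updates shrink both numbers, but the ratio between them is what shows up on the CDN bill.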

Security: A Browser with Superpowers

Electron gives you Node.js inside a browser window.

If you misconfigure context isolation or preload bridges, you’ve basically given arbitrary JS access to:

- File system

- OS APIs

- Native binaries

Most teams get this right. Many don’t. That is exactly why supply-chain attackers like Electron applications: one compromised dependency can inherit the same reach.

Electron can be secured – but it is not locked down by default. It is an opt-in security model. That matters when AI applications handle private documents, embeddings of personal data, or local encrypted vaults.

CPU-bound work is not Node’s strength

Node.js shines for I/O.

AI workloads are not I/O-bound. They are:

- Heavy tensor ops (when they run on the CPU)

- GPU orchestration

- Memory-intensive data pipelines

- Parallel computation

You can bolt on native addons. You can spawn Python. You can run sidecars.

But now your “simple Electron application” is a zoo of duct-taped processes.

At some point, the architecture stops being elegant.

Part 2 – What’s changed: From web-first to AI-native

This shift isn’t “Electron is bad.”

The shift is that software requirements have changed.

Web-First Era (2010–2022)

Apps were:

- Network-first

- Server-centric

- Thin local clients

- Mostly stateless

- UI-heavy, compute-light

Electron was a perfect fit.

AI-Native Era (2023 onward)

Apps are:

- Local-First

- Data-Private

- Compute-Heavy

- Model-Driven

- Multi-Threaded

- Memory-Intensive

- Security-Sensitive

This is no longer a “website in a window.”

This is a local operating environment to which the UI is attached.

Different problem. Different solution.

Part 3 – What Tauri Really Does Differently

Tauri’s main idea:

Use the OS’s native webview for rendering.

Use Rust for the backend.

Keep the UI and system layers tightly permissioned.

No bundled Chromium. No bundled Node.js. No giant always-on runtime.

Native WebView instead of Chromium

Tauri uses:

- WebView2 (Edge runtime) on Windows

- WebKit on macOS

- WebKitGTK on Linux

- The system WebView on Android and iOS (mobile support arrived in 2.0)

Result:

- Zero Chromium duplication

- Smaller binaries

- Lower memory usage

- Faster startup

- Native look-and-feel integration

Typical idle memory in 2026:

- Tauri app: 30–70 MB

- Electron app: 150–400 MB

Not theoretical. Actual measurements from production applications.

Rust backend instead of Node.js

Instead of a bundled Node.js runtime:

- The frontend is just a WebView

- The backend is a compiled Rust binary

- Communication via structured IPC
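Conceptually, “structured IPC” means the frontend can only send well-typed requests that the backend explicitly handles. In a real Tauri app you mark Rust functions with `#[tauri::command]`, register them with `tauri::generate_handler!`, and call them from the frontend with `invoke`. The std-only sketch below models just the idea, not Tauri’s API: a closed set of hypothetical commands (`ReadNote`, `Search`) that the backend pattern-matches on, so any request outside that set cannot even be expressed.

```rust
/// Hypothetical commands the UI may send. Anything not listed here
/// has no representation, so the backend never has to guess.
#[derive(Debug)]
enum Command {
    ReadNote { id: u32 },
    Search { query: String, limit: usize },
}

/// Typed responses sent back to the UI.
#[derive(Debug, PartialEq)]
enum Response {
    Note(String),
    Hits(Vec<String>),
    Error(String),
}

/// Backend dispatcher: every command gets exactly one typed handler.
fn handle(cmd: Command, notes: &[&str]) -> Response {
    match cmd {
        Command::ReadNote { id } => match notes.get(id as usize) {
            Some(text) => Response::Note(text.to_string()),
            None => Response::Error(format!("note {id} not found")),
        },
        Command::Search { query, limit } => Response::Hits(
            notes
                .iter()
                .filter(|n| n.contains(query.as_str()))
                .take(limit)
                .map(|n| n.to_string())
                .collect(),
        ),
    }
}

fn main() {
    let notes = ["rust notes", "tauri ipc", "rust threads"];
    println!("{:?}", handle(Command::ReadNote { id: 1 }, &notes));
    println!("{:?}", handle(Command::Search { query: "rust".into(), limit: 5 }, &notes));
}
```

The design choice worth copying is the closed enum: adding a capability means adding a variant and a handler, which keeps the UI-to-system surface small and reviewable.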

Rust brings:

- True multi-threading

- Predictable memory usage

- No garbage-collection pauses

- Native performance

- Strong type safety

- Zero-cost abstraction

For AI-native applications that manage:

- Model loading

- GPU resource scheduling

- Streaming inference

- Vector DB access

- Encryption

Rust is a natural fit. JavaScript is not.
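Streaming inference is a good example of why threads and channels matter here. The std-only sketch below has a worker thread stand in for a model: it sends tokens over an `std::sync::mpsc` channel as they are produced, and the receiving side (in a real app, the code that forwards tokens to the WebView, for example through Tauri’s event or channel APIs) consumes them immediately. The whitespace-splitting “model” is a placeholder, not a real inference engine.

```rust
use std::sync::mpsc;
use std::thread;

/// Spawns a stand-in "model" that emits tokens one at a time.
/// A real engine (llama.cpp, Candle, ...) would replace the loop body.
fn stream_tokens(prompt: &str) -> mpsc::Receiver<String> {
    let (tx, rx) = mpsc::channel();
    let words: Vec<String> = prompt.split_whitespace().map(str::to_owned).collect();
    thread::spawn(move || {
        for word in words {
            // Stop early if the receiving (UI) side has hung up.
            if tx.send(word).is_err() {
                break;
            }
        }
        // `tx` is dropped here, which closes the channel and ends the receiver's loop.
    });
    rx
}

/// Consumes tokens as they arrive, the way a UI bridge would forward them.
fn collect_stream(rx: mpsc::Receiver<String>) -> Vec<String> {
    let mut out = Vec::new();
    for token in rx {
        out.push(token);
    }
    out
}

fn main() {
    let tokens = collect_stream(stream_tokens("local models stream tokens"));
    println!("{}", tokens.join(" "));
}
```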

Capabilities-Based Security

In Tauri 2.0, the frontend cannot access anything by default.

You must explicitly grant:

- File system access

- Clipboard

- Network

- OS dialogs

- Shell commands

- Sidecars

If you don’t grant a permission, the IPC layer rejects any frontend call that tries to touch that resource.

This is how mobile OS security works.

Now desktop applications finally get the same model.

For AI tools handling sensitive local data, this is not a “nice to have”. It’s table stakes.
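In Tauri 2.0 these grants are declared in capability files (by default under `src-tauri/capabilities/`) that attach permission identifiers to windows. The std-only sketch below is a hypothetical model of the default-deny rule, not Tauri’s implementation: a request succeeds only if that exact permission was granted to the calling window. The permission strings imitate Tauri’s `plugin:allow-command` naming but are illustrative.

```rust
use std::collections::{HashMap, HashSet};

/// Hypothetical capability table: window label -> granted permissions.
struct Capabilities {
    grants: HashMap<String, HashSet<String>>,
}

impl Capabilities {
    /// Starts empty: nothing is allowed until it is granted.
    fn new() -> Self {
        Capabilities { grants: HashMap::new() }
    }

    /// Explicitly grant one permission to one window.
    fn grant(&mut self, window: &str, permission: &str) {
        self.grants
            .entry(window.to_string())
            .or_default()
            .insert(permission.to_string());
    }

    /// Default deny: unknown windows and ungranted permissions are both refused.
    fn check(&self, window: &str, permission: &str) -> Result<(), String> {
        match self.grants.get(window) {
            Some(set) if set.contains(permission) => Ok(()),
            _ => Err(format!("{window} is not allowed to use {permission}")),
        }
    }
}

fn main() {
    let mut caps = Capabilities::new();
    caps.grant("main", "dialog:allow-open");
    caps.grant("main", "fs:allow-read-text-file");

    println!("{:?}", caps.check("main", "fs:allow-read-text-file")); // granted
    println!("{:?}", caps.check("main", "shell:allow-execute")); // never granted
    println!("{:?}", caps.check("settings", "dialog:allow-open")); // other window
}
```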

Part 4 – Tauri 2.0: The Real Leap Forward

Tauri 1.x proved the concept.

Tauri 2.0 (stable since late 2024) made it production-grade.

First-class mobile support

Tauri 2.0 now targets:

- Windows

- macOS

- Linux

- iOS

- Android

The same Rust core. Same frontend codebase. Native mobile packaging.

This is important because AI assistants are now expected to run:

- On laptops

- On phones

- On tablets

- Offline

- With local inference

Maintaining separate mobile stacks used to be expensive. Now a single codebase can cover them.

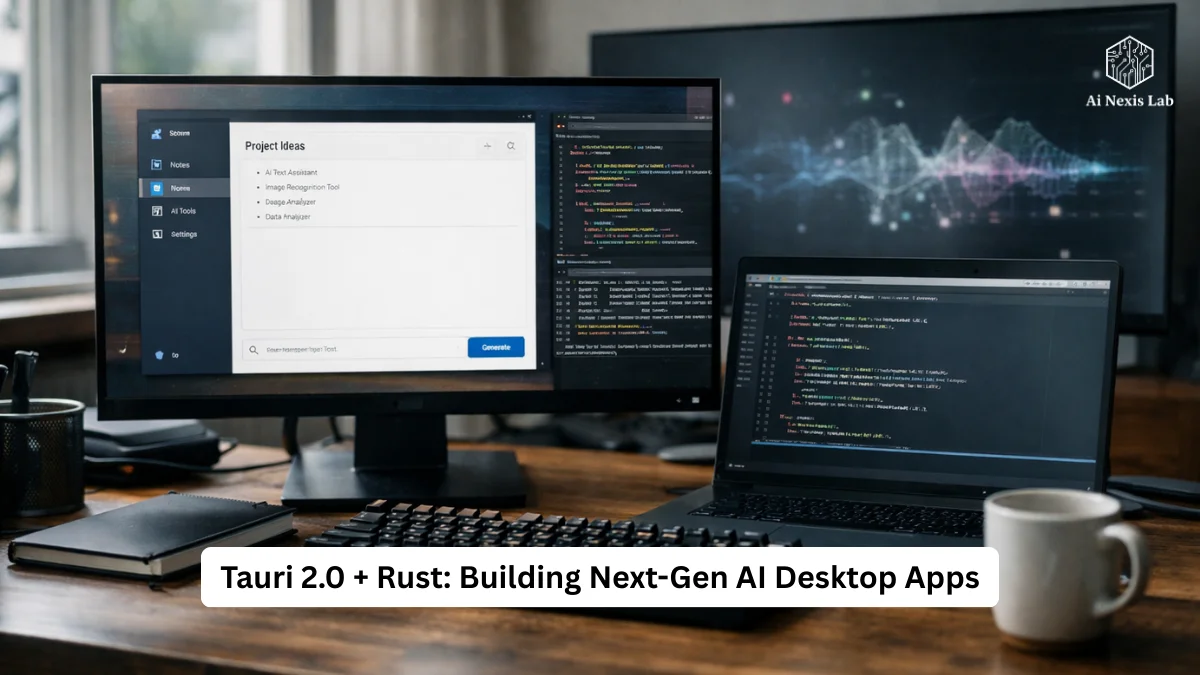

Official Sidecar Pattern

AI-native applications rarely run everything in a single process. Common patterns:

- Rust backend controlling UI and system

- Python process running custom models

- C++ LLM runner (llama.cpp with GGUF models, etc.)

- External GPU daemons

Tauri 2.0 formalizes secure sidecar management:

- Bundled binaries

- Controlled spawning

- IPC channels

- Permission-gated execution

No hacky child_process wrappers. No unsandboxed shell calls. Clean architecture.
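In Tauri itself, sidecars are bundled through the `externalBin` setting and launched through the shell plugin, which checks permissions first. As a framework-agnostic sketch of the same pattern using only `std::process`, the code below refuses anything outside a hypothetical allowlist, then spawns the process with piped stdout and reads its output line by line. The allowlist contents are assumptions, and `echo` (available on Unix-like systems) stands in for a real model runner such as llama.cpp.

```rust
use std::io::{self, BufRead, BufReader};
use std::process::{Command, Stdio};

/// Hypothetical allowlist; a real app would bundle and pin these binaries.
const ALLOWED_SIDECARS: &[&str] = &["echo", "llama-server"];

/// Spawns an allowlisted sidecar and collects its stdout lines.
fn run_sidecar(program: &str, args: &[&str]) -> io::Result<Vec<String>> {
    // Permission gate: refuse before anything is spawned.
    if !ALLOWED_SIDECARS.contains(&program) {
        return Err(io::Error::new(
            io::ErrorKind::PermissionDenied,
            format!("sidecar '{program}' is not allowlisted"),
        ));
    }

    let mut child = Command::new(program)
        .args(args)
        .stdout(Stdio::piped())
        .spawn()?;

    // Read output as it is produced instead of waiting for exit.
    let stdout = child.stdout.take().expect("stdout was piped");
    let mut lines = Vec::new();
    for line in BufReader::new(stdout).lines() {
        lines.push(line?);
    }

    let status = child.wait()?;
    if !status.success() {
        return Err(io::Error::new(
            io::ErrorKind::Other,
            format!("sidecar exited with {status}"),
        ));
    }
    Ok(lines)
}

fn main() {
    match run_sidecar("echo", &["model ready"]) {
        Ok(lines) => println!("sidecar said: {lines:?}"),
        Err(e) => eprintln!("error: {e}"),
    }
    // Not on the allowlist, so this is refused without spawning anything.
    println!("bash refused: {}", run_sidecar("bash", &["-c", "whoami"]).is_err());
}
```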

Plugin Ecosystem Maturity

By 2026, Tauri plugins exist for:

- System Tray

- Auto-Update

- Notifications

- Window Management

- File Dialogs

- Global Shortcuts

- Secure Storage

- Networking

The “Electron has more plugins” argument is no longer conclusive for most applications.

Part 5 – Real-World Metrics in 2026

Let’s set aside the marketing numbers and look at typical ranges by category.

| Metric | Electron (v33+) | Tauri 2.0 |
|---|---|---|
| Typical installer size | 120–220 MB | 3–12 MB |
| Idle RAM | 150–400 MB | 30–70 MB |
| Cold start | 1.2–3.5 s | 0.2–0.9 s |
| Backend runtime | Node.js | Rust |
| GPU/CPU orchestration | External addons | Native |
| Default security | Permissive | Locked down |
| Mobile targets | Separate stack | Native support |
| Sidecar management | Manual | Built-in |

None of this means that Electron is useless.

It just means that the performance gap is now wide enough to matter.

Part 6 – Why Rust Changes Everything for AI Applications

AI-native desktop applications live or die on the realities of system programming.

Memory Safety Without GC

AI pipelines push huge tensors through memory.

Buffer overflows, use-after-free bugs, and data races are destructive in that setting.

Rust offers:

- Compile-time ownership guarantees

- No garbage collector pauses

- Predictable memory footprint

That is why:

- ML runtimes

- Databases

- Vector engines

- Crypto libraries

are increasingly being written in Rust in 2026.
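Deterministic cleanup is what makes the memory footprint predictable: in Rust, a buffer is freed at the exact point its owner goes out of scope, not whenever a collector gets around to it. A minimal sketch with a hypothetical `TensorBuffer` type that tracks live bytes in a global counter shows the release happening at a known point:

```rust
use std::sync::atomic::{AtomicUsize, Ordering};

/// Global count of bytes currently held by tensor buffers (for illustration).
static LIVE_BYTES: AtomicUsize = AtomicUsize::new(0);

/// Hypothetical stand-in for a tensor allocation.
struct TensorBuffer {
    data: Vec<f32>,
}

impl TensorBuffer {
    fn new(len: usize) -> Self {
        LIVE_BYTES.fetch_add(len * std::mem::size_of::<f32>(), Ordering::SeqCst);
        TensorBuffer { data: vec![0.0; len] }
    }
}

impl Drop for TensorBuffer {
    // Runs deterministically when the owner goes out of scope.
    fn drop(&mut self) {
        LIVE_BYTES.fetch_sub(self.data.len() * std::mem::size_of::<f32>(), Ordering::SeqCst);
    }
}

fn live_bytes() -> usize {
    LIVE_BYTES.load(Ordering::SeqCst)
}

fn main() {
    {
        let activations = TensorBuffer::new(1_000_000);
        println!("inside scope: {} bytes live ({} floats)", live_bytes(), activations.data.len());
    } // `activations` is dropped here, not "eventually".
    println!("after scope: {} bytes live", live_bytes());
}
```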

True parallelism

Token streaming, embedding generation, file indexing, UI updates – all at once.

Rust threads map directly to OS threads.

No event-loop bottlenecks. No blocking hacks.
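A minimal std-only sketch of that kind of parallelism: `std::thread::scope` (stable since Rust 1.63) splits a batch of documents across OS threads, each thread counts words for its chunk, and the partial results are merged before the scope ends. The word-count “indexer” is a placeholder for real work such as embedding generation.

```rust
use std::collections::HashMap;
use std::thread;

/// Counts words in a slice of documents (stand-in for real indexing work).
fn index_chunk(docs: &[&str]) -> HashMap<String, usize> {
    let mut counts = HashMap::new();
    for doc in docs {
        for word in doc.split_whitespace() {
            *counts.entry(word.to_lowercase()).or_insert(0) += 1;
        }
    }
    counts
}

/// Indexes documents on `workers` OS threads and merges the partial results.
fn parallel_index(docs: &[&str], workers: usize) -> HashMap<String, usize> {
    let workers = workers.max(1);
    // Ceiling division; at least 1 because `chunks(0)` would panic.
    let chunk_size = ((docs.len() + workers - 1) / workers).max(1);
    thread::scope(|s| {
        let handles: Vec<_> = docs
            .chunks(chunk_size)
            .map(|chunk| s.spawn(move || index_chunk(chunk)))
            .collect();

        let mut merged = HashMap::new();
        for handle in handles {
            for (word, n) in handle.join().expect("worker panicked") {
                *merged.entry(word).or_insert(0) += n;
            }
        }
        merged
    })
}

fn main() {
    let docs = ["Rust threads", "rust channels", "Tauri IPC", "local models"];
    let index = parallel_index(&docs, 2);
    println!("'rust' appears {} times", index["rust"]);
}
```

Because the threads are scoped, they can borrow `docs` directly without cloning or reference counting; the compiler guarantees they finish before the borrow ends.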

FFI Without Pain

You need to call:

- CUDA

- Metal

- DirectML

- llama.cpp

- ONNX Runtime

Rust’s FFI ecosystem is mature.

Calling native AI runtimes is straightforward, and the unsafe boundary stays explicit and small enough to wrap in safe APIs.
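The pattern is the same whether the target is llama.cpp or a GPU runtime: declare the C signature, call it inside `unsafe`, and wrap it in a safe function. To stay self-contained, the sketch below calls the C standard library’s `strlen` instead of an AI runtime; binding llama.cpp or ONNX Runtime follows the same shape, usually with generated bindings.

```rust
use std::ffi::CString;
use std::os::raw::c_char;

// Declaration of a C function from the platform's C library.
// `unsafe extern` blocks are accepted since Rust 1.82 and required by the 2024 edition.
unsafe extern "C" {
    fn strlen(s: *const c_char) -> usize;
}

/// Safe wrapper: CString guarantees a valid, NUL-terminated pointer
/// that lives for the duration of the call.
fn c_strlen(text: &str) -> Option<usize> {
    let owned = CString::new(text).ok()?; // fails if `text` contains an interior NUL
    // SAFETY: `owned` is a valid NUL-terminated string that outlives this call.
    Some(unsafe { strlen(owned.as_ptr()) })
}

fn main() {
    println!("{:?}", c_strlen("llama.cpp"));
    println!("{:?}", c_strlen("bad\0input"));
}
```

Callers only ever see `c_strlen`, so the invariants the C side needs are checked in one place instead of at every call site.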

Ease of distribution

A compiled Rust binary is:

- Single file

- No runtime dependency hell

- Easy to cross-compile

- Fast to launch

Exactly what desktop deployment needs.

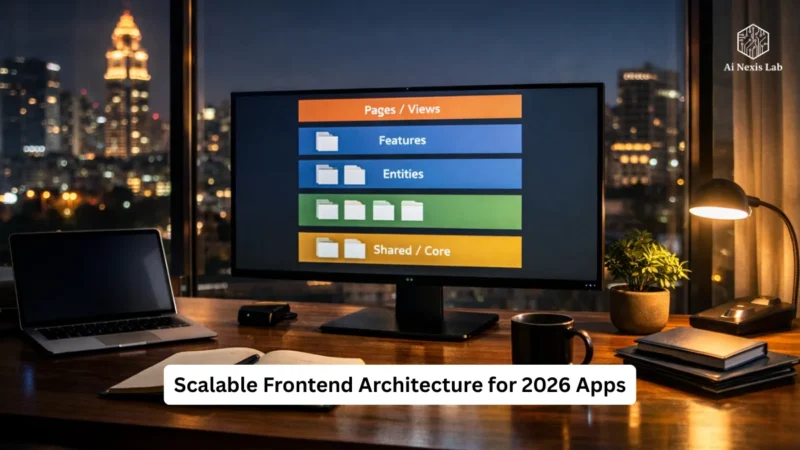

Part 7 – A Modern AI-Native Desktop Architecture

Anatomy of a real 2026 local AI assistant built with Tauri 2.0:

Frontend

- SvelteKit / React / Solid / Vue

- Runs in Native WebView

- Pure UI + IPC Calls

Core Backend

Handles:

- File System

- Encryption

- App State

- Window Management

- Permissions

- Model Lifecycle

AI Engine

- Option A: Rust ML Crates (Candle, Burn, Tract)

- Option B: llama.cpp / vLLM / Python running as a sidecar

- Rust-Controlled GPU Scheduling

Database

- SQLite or SurrealDB

- Vector Search Extension

- Encrypted Local Storage

Security Layers

- Capability Rules

- Sandboxed WebView

- Restricted Sidecar

This is not theoretical.

This is how serious local AI tools are built now.
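The “Model Lifecycle” responsibility of the core backend is essentially a small state machine. A hypothetical sketch (state names and transitions are illustrative, not taken from any framework) that turns illegal transitions, such as marking an unloaded model as loaded, into errors instead of crashes:

```rust
/// Hypothetical lifecycle states for a local model managed by the Rust core.
#[derive(Debug, PartialEq)]
enum ModelState {
    Unloaded,
    Loading { path: String },
    Ready { path: String },
    Failed { reason: String },
}

/// Hypothetical events coming from the UI or the model runner.
#[derive(Debug)]
enum Event {
    Load(String),
    Loaded,
    LoadFailed(String),
    Unload,
}

/// Pure transition function: returns the next state or rejects the event.
fn transition(state: &ModelState, event: Event) -> Result<ModelState, String> {
    use ModelState::*;
    match (state, event) {
        (Unloaded | Failed { .. }, Event::Load(path)) => Ok(Loading { path }),
        (Loading { path }, Event::Loaded) => Ok(Ready { path: path.clone() }),
        (Loading { .. }, Event::LoadFailed(reason)) => Ok(Failed { reason }),
        (Ready { .. }, Event::Unload) => Ok(Unloaded),
        (s, e) => Err(format!("cannot apply {e:?} in state {s:?}")),
    }
}

fn main() {
    let mut state = ModelState::Unloaded;
    let events = [Event::Load("models/assistant.gguf".to_string()), Event::Loaded, Event::Unload];
    for event in events {
        state = transition(&state, event).expect("valid transition");
        println!("{state:?}");
    }
    // Rejected: nothing is loading, so "Loaded" makes no sense here.
    println!("{:?}", transition(&state, Event::Loaded));
}
```

Keeping the transition function pure makes it trivial to test and lets the UI ask “what can I do now?” without touching the model runner.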

Part 8 – So… is Electron dead?

No. But it’s moving out of the fastest growing segment.

Electron still makes sense when:

- You need large-scale Node.js ecosystem reuse

- You have legacy code

- You build UI-heavy but compute-light tools

- You prioritize development speed over performance

Electron struggles when:

- Local inference is key

- Memory footprint is important

- Startup speed is important

- Security constraints are tight

- Code sharing with mobile is required

- Heavy native integration exists

It’s not “Electron is dead”.

It’s “Electron is no longer the default choice.”

And that’s a major change.

Part 9 – Business Perspective: Why This Matters Beyond Technology

Users now expect:

- Instant launch

- Offline AI

- No cloud dependency

- Private data

- Longer battery life

- Lower system overhead

If your app:

- Eats RAM

- Spins fans

- Lags on startup

- Downloads large updates

Users notice. And churn.

Performance is now the product.

Architecture is the business strategy.

Part 10 – The New Developer Reality

Learning Curve Reality Check:

- Electron → JavaScript Comfort Zone

- Tauri → Rust Learning Required

Rust is harder than JavaScript.

There’s no point in pretending otherwise.

But the result:

- Better performance

- Better security

- Better architecture

- Better long-term maintainability

If you’re building AI-native products, you’re already dealing with complexity. Avoiding Rust pushes that complexity into fragile runtime hacks.

Part 11 – Common Myths

“Tauri is only for small applications.”

False. Many production Tauri applications now serve millions of users.

“WebView UI is slower than Chromium.”

In 2026, native webviews are highly optimized and GPU-accelerated. The bottleneck is almost never the UI layer anymore.

“Electron is easy to secure.”

No. It’s easy to accidentally misconfigure. Tauri’s default-deny model is inherently safer.

“Rust is very difficult for production teams.”

It’s a skill issue, not a platform issue. Teams learned React. They can learn Rust.

Part 12 – The Path to the Future

Where this is going:

- More on-device inference

- More encrypted local data

- More hybrid CPU/GPU scheduling

- More multi-modal processing

- More offline-first applications

These trends favor:

- Native performance

- Memory control

- Strong security models

Which means:

- Rust-style backends

- Minimal UI shells

- Permissioned APIs

- OS-level integration

That’s exactly what Tauri is betting on.

Part 13 – Practical Recommendation

If you are:

- Building a new AI-native desktop application in 2026

→ Get started with Tauri 2.0.

If you are:

- Maintaining an existing Electron codebase

→ Don’t panic. But plan a migration path if performance or security is being compromised.

If you are:

- Starting a startup today

→ Don’t lock yourself into a bundled-Chromium stack unless you have a strong reason.

Frequently Asked Questions

Q: Should I rewrite my existing Electron application in Tauri?

A: Not automatically. If your app:

1) Works well

2) Has no performance complaints

3) Doesn’t run heavy local AI

4) Relies heavily on Node packages

Then stay on Electron for now.

Rewrite when:

1) RAM usage becomes unacceptable

2) Startup is slow

3) You introduce local inference

4) You need mobile targets

Q: Is Rust mandatory for Tauri?

A: Yes for the backend. The frontend remains standard web tech. You don’t need Rust for the UI – just for the system logic.

Q: Can I still use Python for my AI models?

A: Yes. Run Python as a sidecar. Let Rust handle the process lifecycle and IPC. Clean separation.

Q: How difficult is it to migrate from Electron?

A: Frontend code is often easily ported.

Backend logic must be rewritten from Node to Rust. That’s the real work.

Q: Does Tauri support auto-updates?

A: Yes. The official updater plugin covers Windows, macOS, and Linux; mobile apps update through the app stores.

Q: Is mobile really ready for production?

A: As of 2026: Yes. Multiple shipped applications. The mobile ecosystem is still smaller than the desktop one, but stable.

Q: What about WebGPU or WASM?

A: WebGPU is available wherever the platform’s WebView supports it; Tauri doesn’t add it on its own. WASM usually runs inside the frontend.

Q: Will Electron disappear?

A: No. Legacy, ecosystem, and inertia keep it alive. But it won’t dominate new AI-native desktop applications.

Q: Can Tauri handle complex multi-window applications?

A: Yes. Multi-window APIs exist. Not as mature as Electron yet, but good enough for most cases.

Q: What is the biggest risk in adopting Tauri?

A: The team’s Rust learning curve. Solve it with training. The technology itself is stable.

Final Verdict

Electron was the right answer for the web-first decade.

Tauri 2.0 is the right answer for the AI-native decade.

Different era. Different constraints. Different priorities.

If you build software that:

- Runs local models

- Handles private data

- Requires native performance

- Ships to desktop and mobile

Then sticking with a browser-in-a-box architecture is nostalgia, not strategy.

The desktop app of the future is:

- Thin UI

- Native Core

- Secure by default

- Efficient by design

And yes – it’s written in Rust.