The “Open Source” Pivot: DeepSeek-V3 vs Llama 3 vs GPT-4o – Is This the Death of Proprietary API Costs?

Compare DeepSeek-V3 vs Llama 3 vs GPT-4o with real cost, API, and open-source insights shaping AI pricing decisions in 2026.

Short answer: No – not yet. But the ground under the old business model has shifted. Rapidly improving open models, low-cost challengers, and growing scrutiny of who owns and operates AI models are forcing customers and vendors to rethink where the money goes. If you’re an engineer, product lead, or CTO who assumed that owning your model stack is always cheaper and more secure, this article will make you uncomfortable – and it should. I’ll walk you through the facts, decode the marketing noise, and give you clear, actionable advice on what to do next.

Why this matters now

2024–2026 is the period when the LLM market stops being an “OpenAI vs. everyone else” story. Several developments converged:

- Meta shipped Llama 3 and then followed it up with the larger Llama 3.1 family release, clearly positioning “open” models as a viable foundation for production systems. (See Meta’s announcement and release notes.)

- OpenAI continued to iterate on the high-end models (GPT-4o and family) while adjusting the price and product mix – meaning the proprietary API remains powerful but expensive for heavy use. (OpenAI model pages and price lists.)

- New challengers from China (e.g., DeepSeek) pushed aggressive pricing and distinctive architectural choices (mixture-of-experts, agent-oriented features), provoking regulatory attention and geopolitical backlash in multiple countries. (DeepSeek site, GitHub repo, Reuters coverage.)

What all of this means is one thing: the cost calculation for running advanced LLM-powered products is shifting from “pay for the API and forget it” to a subtle trade-off between license fees, engineering overhead, hardware considerations, data governance, and regulatory risk.

Meet the Players (Short, Real Profiles)

DeepSeek-V3 / DeepSeek-V3.2

DeepSeek emerged from China and positioned itself aggressively with a mix of open-weight releases and commercial services. According to DeepSeek, its V3 family uses a Mixture-of-Experts (MoE) architecture and multi-token prediction to reduce inference cost per token while scaling capacity. The V3.x models are offered through web, app, and API access, with agent-style reasoning features. That rapid public push also drew regulatory scrutiny and, in some countries, restrictions on government use or removal from app stores.

Llama 3 (Meta)

Meta introduced Llama 3 (and later Llama 3.1) as a set of pre-trained and instruction-tuned models intended to be “the most capable openly available foundation model”, released in multiple parameter sizes, distributed via Hugging Face and Meta’s site, and governed by a community license. Meta’s strategy was clear: make a high-quality base model widely available so that researchers, startups, and large enterprises can build, fine-tune, and host it on their own infrastructure.

GPT-4o (OpenAI)

OpenAI’s GPT-4o family represents the commercial, proprietary side: powerful, easy to integrate via API, and priced per token (with multiple tiers such as GPT-4o, GPT-4o-mini, and realtime variants). OpenAI still leads in product polish, ecosystem integration, and platform offerings like retrieval, fine-tuning, and multi-model tooling – but its direct API costs are higher, and heavy users increasingly scrutinize the total cost of their workloads.

Marketing vs. Engineering Reality

Let’s break this down: vendors market capability and cost estimates optimistically. Three realities that product people often underappreciate:

- “Free” open models aren’t free. Running Llama 3 in production means paying for engineers, observability, GPU redundancy, deployment, and ops. You trade license/usage fees for capital and operating costs – and hidden complexity. That’s why some recent analyses call it the “expensive open-source lie”: the dollars don’t disappear, they just move.

- Proprietary APIs reduce engineering friction. Yes, you pay per token, but you also offload inference hosting, patching, model updates, and some compliance tooling. For small projects or rapid prototyping, that friction reduction is real and often worth the price.

- Geopolitics and regulation add a new axis of value. If your data passes through servers in certain countries, or the vendor is subject to export controls, a cheap API can become unusable for regulated workloads. DeepSeek’s rapid growth drew government restrictions, app store removals, and privacy reviews in many countries. Those are not theoretical concerns for enterprises.

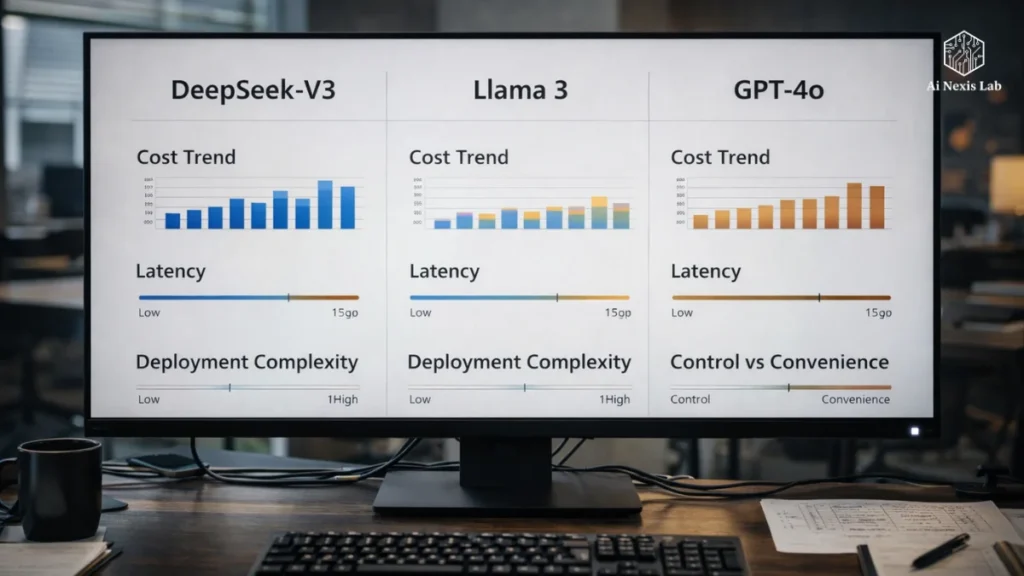

DeepSeek-V3 vs. Llama 3 vs. GPT-4o – A Side-by-Side Reality Check

I’ll break this down into technical and practical properties, and for each, I’ll give the hard facts and what they mean for you.

1) Architecture and Inference Economics

- DeepSeek-V3 (MoE focus): DeepSeek promotes a mixture-of-experts design where only a subset of parameters is active per token (~671B total claimed, with 37B active). MoE can reduce inference FLOPs per token, improving cost per token if you have the right serving infrastructure – but MoE is difficult to serve efficiently on commodity GPUs, and load-balancing/latency challenges appear at scale. DeepSeek’s GitHub and tech notes discuss these architectural choices.

Bottom line: potential for very cheap inference per token, but only if you have an optimized serving stack.

- Llama 3 (dense, large variants): Llama 3 and 3.1 are dense models in several sizes (8B and 70B in Llama 3; Llama 3.1 added a 405B variant). Dense models are easy to run on mainstream inference stacks and benefit from existing optimized runtimes (e.g., Triton, FlashAttention, quantized kernels). They are less exotic than MoE and more predictable.

- GPT-4o (proprietary, optimized): OpenAI runs a tuned infrastructure and invests heavily in making inference reliable and fast. You pay for convenience and uptime. From a pure flip-the-switch perspective, this is the lowest-effort way to get high-quality results quickly – as long as your usage volume doesn’t make the token bill painful.
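To make the MoE economics above concrete, here is a minimal Python sketch of generic top-k expert gating plus the active-parameter arithmetic. It assumes a plain softmax-over-selected-experts router, a common textbook formulation and not necessarily DeepSeek’s exact routing (DeepSeek’s published design adds refinements such as shared experts and its own load-balancing scheme). The 2-FLOPs-per-active-parameter figure is a rule of thumb, not a measured number.

```python
import math

def top_k_gate(router_logits, k):
    """Pick the k highest-scoring experts for one token.

    Returns (expert_index, weight) pairs; weights are a softmax over the
    selected logits only, so they sum to 1. Unselected experts do no work.
    """
    ranked = sorted(range(len(router_logits)), key=lambda i: router_logits[i], reverse=True)
    chosen = ranked[:k]
    top = max(router_logits[i] for i in chosen)  # subtract the max for numerical stability
    exps = [math.exp(router_logits[i] - top) for i in chosen]
    total = sum(exps)
    return [(i, e / total) for i, e in zip(chosen, exps)]

def approx_forward_flops_per_token(active_params):
    """Rule of thumb: about 2 FLOPs (one multiply-add) per active parameter per token."""
    return 2 * active_params

# Figures quoted above for DeepSeek-V3: ~671B total parameters, ~37B active per token.
TOTAL_PARAMS = 671e9
ACTIVE_PARAMS = 37e9

if __name__ == "__main__":
    print(top_k_gate([0.1, 2.0, -1.0, 1.5], k=2))  # experts 1 and 3 are selected
    print(f"active fraction: {ACTIVE_PARAMS / TOTAL_PARAMS:.1%}")  # 5.5%
    print(f"~{approx_forward_flops_per_token(ACTIVE_PARAMS):.2e} FLOPs/token vs "
          f"~{approx_forward_flops_per_token(TOTAL_PARAMS):.2e} if every weight were used")
```

The takeaway: compute per token tracks the ~37B active parameters, but memory still has to hold all ~671B, which is one reason MoE serving stacks are harder to run efficiently than dense ones.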

2) Quality and Capacity (Benchmarks vs. Actual Tasks)

- Benchmarks matter, but real tasks matter more. Papers and marketing highlight benchmark scores; what you will experience in the product is the hallucination rate, instruction adherence, and latency. Both Meta’s Llama 3 family and OpenAI’s GPT-4o have demonstrated strong instruction following; DeepSeek claims “reasoning-first” and agent features that can help with complex multi-step tasks. Independent head-to-head comparisons vary by task and dataset, and the gap between the top open and proprietary models is narrowing.

Bottom line: expect all three families to be competitive on many tasks. Proprietary models still have a slight edge in out-of-the-box polish and tool integration; open models reward engineering investment.

3) Price and Total Cost of Ownership (TCO)

- OpenAI (GPT-4o): The price per token is clear. For many real applications, costs scale linearly with usage and can become the dominant line item. OpenAI’s pricing page lists input and output token prices for GPT-4o and variants – useful when modeling projections.

- Llama 3: There are no API fees from Meta for the model (subject to license terms), but you will have to account for GPU hours, storage, networking, and staff. Recent industry reports show that open-source stacks can be significantly cheaper on a token basis if you have the volume and the teams to manage it – but the savings only become apparent after crossing a fairly large threshold.

- DeepSeek: Its public push and low-cost claims have disrupted local markets – but the actual TCO depends on where you host, legal restrictions, and whether you accept vendor-hosted inference on Chinese servers. DeepSeek made a rapid market entry but then faced regulatory pressure in many countries, which is a non-trivial operational risk.
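A back-of-the-envelope way to compare the API and self-hosted cost models above, as a Python sketch. All prices, throughput, and utilization figures are hypothetical placeholders, not current list prices or benchmarks; plug in your provider’s published per-million-token prices and your own measured serving throughput. The self-hosted figure covers GPU time only; staff and other fixed costs are left out here.

```python
def api_cost_usd(input_tokens, output_tokens, usd_per_m_input, usd_per_m_output):
    """Monthly API bill: tokens are billed per million, with separate input/output prices."""
    return (input_tokens / 1e6) * usd_per_m_input + (output_tokens / 1e6) * usd_per_m_output

def self_hosted_usd_per_m_tokens(gpu_hourly_usd, num_gpus, tokens_per_second, utilization):
    """GPU-only serving cost per million tokens for one self-hosted replica.

    tokens_per_second: replica throughput at full load (measure it, don't guess).
    utilization: fraction of paid GPU hours doing useful work, in (0, 1].
    """
    if not 0 < utilization <= 1:
        raise ValueError("utilization must be in (0, 1]")
    cost_per_hour = gpu_hourly_usd * num_gpus
    useful_tokens_per_hour = tokens_per_second * 3600 * utilization
    return cost_per_hour / useful_tokens_per_hour * 1e6

if __name__ == "__main__":
    # Hypothetical: 800M input + 200M output tokens/month at placeholder prices of $2/M in, $8/M out.
    print(api_cost_usd(800e6, 200e6, 2.0, 8.0))  # 3200.0
    # Hypothetical: 8 GPUs at $2/hour each, 2,500 tokens/s aggregate throughput.
    for util in (1.0, 0.5):
        print(util, round(self_hosted_usd_per_m_tokens(2.0, 8, 2500, util), 2))
```

Halving utilization doubles the effective per-token cost, which is why idle GPUs quietly erase the theoretical savings of self-hosting.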

Concrete example (hypothetical): If your application processes 1B tokens/month (input plus output), the proprietary API bill could range from hundreds to tens of thousands of USD/month depending on the model and the input/output mix. Hosting Llama 3 on your own GPUs can reduce per-token inference costs but adds hardware amortization, ops salaries, and SRE overhead. Some industry posts estimate that open-source TCO is 5–10× cheaper per token at scale, but that assumes efficient quantization, batching, and well-utilized GPU pools – none of which is trivial.
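The threshold effect in this hypothetical can be written as a simple break-even formula: self-hosting wins once per-token savings cover the fixed monthly cost of staff and amortized hardware, i.e. at a volume of fixed cost / (API price per token − self-hosted price per token). The sketch below assumes a purely linear cost model and uses made-up numbers for illustration only.

```python
def blended_usd_per_m(usd_per_m_input, usd_per_m_output, output_share):
    """Average API price per million tokens, given the fraction of tokens that are output."""
    return (1 - output_share) * usd_per_m_input + output_share * usd_per_m_output

def break_even_m_tokens(fixed_monthly_usd, api_usd_per_m, self_hosted_usd_per_m):
    """Monthly volume (millions of tokens) above which self-hosting is cheaper.

    Linear model: API cost = api_usd_per_m * V; self-hosted cost =
    fixed_monthly_usd + self_hosted_usd_per_m * V. Returns None when the API
    is never more expensive per token, i.e. self-hosting never breaks even.
    """
    margin = api_usd_per_m - self_hosted_usd_per_m
    if margin <= 0:
        return None
    return fixed_monthly_usd / margin

if __name__ == "__main__":
    api = blended_usd_per_m(2.0, 8.0, output_share=0.2)  # placeholder prices, about $3.20/M blended
    # Hypothetical: $40k/month for an ops/SRE share plus amortized hardware; $0.50/M GPU cost.
    volume = break_even_m_tokens(40_000, api, 0.50)
    print(f"break-even at ~{volume / 1000:.1f}B tokens/month")
```

With these invented inputs, break-even lands near 15B tokens/month, well above the 1B example, which illustrates why savings only appear past a fairly large threshold.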

4) Privacy, Governance and Regulatory Risk

- This is the silent killer of “cheap” choices. The rapid adoption of DeepSeek in 2025–2026 led many governments to restrict or ban it in public systems due to data locality and privacy concerns. It is a real operational hurdle for banks, governments and regulated industries. Both Meta and OpenAI are subject to Western regulatory frameworks and enterprise agreements that provide strong compliance controls for certain customers.

Bottom line: If you’re managing regulatory data, “cheap” and “foreign-hosted” are not interchangeable.

So — does open source kill proprietary API prices?

Again the short, provocative answer: not dead, but the death knell can be heard for certain use cases.

Here’s a more useful breakdown by situation:

Use cases where proprietary APIs still win

- Early product-market-fit work and experimentation. The math: ship faster, avoid ops, accept higher per-unit costs.

- Low-volume but high-complexity tasks where latency, multi-modal tool integration, and reliability are critical.

- Companies that value SLAs, contractual compensation, and managed compliance.

Use cases where open models are seriously competitive (or better)

- Very high token volumes (e.g., large-scale chatbots, content generation platforms) where token bills would otherwise become prohibitive.

- Teams with the ops capability and capital to invest in inference infrastructure. Quantization and sparsity techniques make it realistic to run large models economically.

- Organizations that require complete control over models for IP, privacy, or low-latency edge hosting (e.g., on-prem for regulated industries).

Hybrid Play (Most Practical)

- Use proprietary APIs for the control plane and for low-latency or low-volume features (summarization, tooling, fine-tuning), while offloading bulk generation or embedding lookups to self-hosted open models. This is a playbook many mid-sized companies are adopting.

Hidden Costs That Destroy the “Open is Always Cheaper” Argument

When people argue that open models will “destroy API costs,” they ignore several categories of cost that hit the bank account:

- Engineering and ops – deployment, autoscaling, model updates, security, observability, and SRE on-call. This isn’t one engineer for a week – it’s a long-term team. The post “The Costly Open-Source LLM Lie” explains how costs move from licensing to engineering and infrastructure.

- Hardware amortization – purchasing GPUs, networking, and storage. If you buy racks to save money, you take on utilization risk. Cloud spot instances reduce costs but increase operational fragility.

- Model maintenance – continuous fine-tuning, data cleaning, and retraining. Proprietary vendors absorb those costs in their fees. With open models, you own them.

- Safety and compliance – misuse guardrails, content filters, red-team testing, and legal review. Some of these come built into proprietary platforms; with open models, you build them yourself.

- Business continuity and risk — Vendor lock-in versus geopolitical risk. DeepSeek’s recent regulatory troubles are a real demonstration that vendor choice has geopolitical costs.

So yes – open models may be cheaper on a token basis, but real organizations pay for stability, security, and legitimacy. The question is not “Which is cheaper?” but “Which minimizes your combined cash cost, risk and opportunity cost?”

The Rise of DeepSeek and Regulatory Lessons (Short Case Study)

The path of DeepSeek from 2024-2026 is instructive.

- They launched aggressive V2/V3 variants and promoted low-cost access, which drove rapid user growth. (Company releases and GitHub.)

- As global adoption grew, so did scrutiny – regulators in multiple countries reviewed or banned its use. Italy, France, the Netherlands, and others investigated privacy or antitrust aspects. Reuters covered the restrictions and investigations, noting concerns about user data storage and national security.

Lesson: If your “cheap” model routes data to places with regulatory risk, your cost savings can evaporate overnight due to restrictions, compliance costs, or the need to re-engineer. Vendor locality matters as much as price.

Engineering Tricks: How to Win the Cost War Without Sacrificing Speed

If you’re serious about reducing API costs but aren’t ready to ditch a proprietary provider, here are practical, no-BS tricks that work in production.

1) Tier responses by cost sensitivity

- Use GPT-4o (or another high-quality API) for early, high-value interactions that set the context (e.g., user-intent detection, important summaries).

- Use open models (Llama 3 derivatives, quantized) for bulk, low-risk text generation tasks (e.g., template filling, internal drafts).

This hybrid approach can dramatically reduce token bills.
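A minimal sketch of cost-sensitivity tiering as a static routing rule. The feature names and backend labels are hypothetical; in practice the mapping would come from your own per-feature token and revenue data.

```python
from dataclasses import dataclass

# Hypothetical backend labels; wire these to your actual clients.
PREMIUM = "proprietary-api"   # e.g. GPT-4o
BULK = "self-hosted-open"     # e.g. a quantized Llama 3 derivative

# Hypothetical features whose output sets context for the rest of a session.
HIGH_VALUE_FEATURES = {"intent_detection", "executive_summary"}

@dataclass(frozen=True)
class Task:
    feature: str
    risk: str  # "low" or "high"

def choose_backend(task: Task) -> str:
    """Send high-value or high-risk work to the premium API, bulk low-risk work to the open model."""
    if task.feature in HIGH_VALUE_FEATURES or task.risk == "high":
        return PREMIUM
    return BULK

if __name__ == "__main__":
    print(choose_backend(Task("template_fill", risk="low")))     # self-hosted-open
    print(choose_backend(Task("intent_detection", risk="low")))  # proprietary-api
```

Keeping the rule declarative makes it easy to move a feature between tiers as prices or quality change.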

2) Cache everything wisely

- Store embeddings and response snippets. Use a rules engine to avoid repeated calls where cached output is acceptable.
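One way to implement this is an exact-match response cache keyed on the model name and a whitespace-normalized prompt, with a time-to-live so stale answers expire. This is a hypothetical in-process sketch; `call_model` stands in for whatever client you use, a production system would more likely use a shared store, and semantic (embedding-similarity) caching is a separate, looser technique.

```python
import hashlib
import time

class ResponseCache:
    """Exact-match cache of model responses with a time-to-live."""

    def __init__(self, ttl_seconds, clock=time.monotonic):
        self.ttl = ttl_seconds
        self.clock = clock  # injectable so expiry can be tested
        self._store = {}

    @staticmethod
    def key(model, prompt):
        # Collapse whitespace only; lowercasing could change meaning, so it is not done here.
        normalized = " ".join(prompt.split())
        return hashlib.sha256(f"{model}\n{normalized}".encode("utf-8")).hexdigest()

    def get(self, model, prompt):
        k = self.key(model, prompt)
        entry = self._store.get(k)
        if entry is None:
            return None
        value, stored_at = entry
        if self.clock() - stored_at > self.ttl:
            del self._store[k]  # expired: evict and report a miss
            return None
        return value

    def put(self, model, prompt, value):
        self._store[self.key(model, prompt)] = (value, self.clock())

def cached_call(cache, model, prompt, call_model):
    """Return a cached response if fresh; otherwise call the (hypothetical) model client and cache it."""
    hit = cache.get(model, prompt)
    if hit is not None:
        return hit
    result = call_model(model, prompt)
    cache.put(model, prompt, result)
    return result
```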

3) Reduce the context window where possible

- Cut out irrelevant context, compress long histories into concise embeddings or summaries, and call the larger model with only focused prompts.
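A minimal sketch of budget-based context trimming: keep the system prompt and as many of the most recent turns as fit. The token counter is a crude whitespace approximation standing in for the model’s real tokenizer, and the dropped turns could be replaced by a short summary rather than discarded.

```python
def approx_tokens(text):
    """Crude stand-in for a real tokenizer: one token per whitespace-separated word."""
    return len(text.split())

def trim_history(system, turns, budget, count=approx_tokens):
    """Keep the system prompt plus the most recent turns that fit within `budget` tokens."""
    used = count(system)
    kept = []
    for turn in reversed(turns):  # walk from newest to oldest
        cost = count(turn)
        if used + cost > budget:
            break
        kept.append(turn)
        used += cost
    kept.reverse()  # restore chronological order
    return [system] + kept

if __name__ == "__main__":
    print(trim_history("you are helpful", ["a b c d", "e f", "g h i"], budget=8))
```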

4) Model distillation and response post-processing

- Distill large models into smaller student models for common tasks. Use lightweight classification for trivial decisions. This reduces calls on larger models.
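For distillation, the core training signal is usually a soft-target loss: the student is pushed to match the teacher’s temperature-softened output distribution. Below is a dependency-free sketch of that loss for a single prediction; a real pipeline would compute it batched inside a training framework and typically mix it with ordinary cross-entropy on labeled data.

```python
import math

def softmax(logits, temperature=1.0):
    """Temperature-scaled softmax; higher temperature gives a softer distribution."""
    scaled = [x / temperature for x in logits]
    top = max(scaled)  # subtract the max for numerical stability
    exps = [math.exp(x - top) for x in scaled]
    total = sum(exps)
    return [e / total for e in exps]

def distillation_loss(teacher_logits, student_logits, temperature=2.0):
    """KL(teacher || student) on softened distributions, scaled by T^2 to keep gradient size comparable."""
    p = softmax(teacher_logits, temperature)
    q = softmax(student_logits, temperature)
    kl = sum(pi * math.log(pi / qi) for pi, qi in zip(p, q) if pi > 0)
    return kl * temperature ** 2

if __name__ == "__main__":
    print(distillation_loss([2.0, 1.0, 0.1], [2.0, 1.0, 0.1]))  # 0.0: student matches teacher
    print(distillation_loss([2.0, 1.0, 0.1], [0.1, 1.0, 2.0]))  # positive: student disagrees
```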

5) Quantization and batching for self-hosted models

- Use 8-bit / 4-bit quantization and aggressive batching to reduce GPU memory usage and cost. But test carefully – quantization can change behavior, especially on reasoning tasks.
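To show what 8-bit quantization does to weights, here is a minimal symmetric per-tensor int8 sketch: each weight is stored as an integer in [-127, 127] plus one shared float scale. Real runtimes use finer-grained (per-channel or per-group) scales and 4-bit formats; this only illustrates the rounding error that can shift model behavior.

```python
def quantize_int8(weights):
    """Symmetric per-tensor int8 quantization: w ~= scale * q with q in [-127, 127]."""
    max_abs = max(abs(w) for w in weights)
    scale = max_abs / 127 if max_abs > 0 else 1.0
    q = [max(-127, min(127, round(w / scale))) for w in weights]
    return q, scale

def dequantize_int8(q, scale):
    """Map stored integers back to approximate float weights."""
    return [v * scale for v in q]

if __name__ == "__main__":
    w = [0.5, -1.0, 0.25, 0.0031]
    q, scale = quantize_int8(w)
    restored = dequantize_int8(q, scale)
    print(q)  # the tiny last weight rounds to 0 and is lost entirely
    print(max(abs(a - b) for a, b in zip(w, restored)))  # at most scale / 2
```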

6) Negotiate enterprise agreements

- If you are a large customer, talk to vendors about usage levels, custom pricing, or pre-paid credits. Proprietary vendors still negotiate. If your bill is high, you have leverage.

Business and Strategy Takeaways – Tough, Actionable Advice

I’ll be blunt: If you’re a founder or product lead who thinks “we’ll just move everything to Llama 3 and save money tomorrow,” stop. That’s naive. Instead:

- Map your token economics today. Track token usage by feature. Which features use the most tokens and which ones bring in the most revenue? Target those to reduce costs first.

- Benchmark both sides on your own workload. Run real-world A/B tests: GPT-4o vs. Llama 3 (quantized + tuned) on your own prompts. Measure bounce rate, throughput, and end-user satisfaction. Don’t trust benchmark numbers from blogs.

- Create a migration plan with measurable milestones. Start with non-critical, high-volume workloads. If the goal is to save $X/month, calculate the payback period for hardware and SRE.

- Consider geopolitical risk as a line item. If the cheapest vendor routes data through a risky jurisdiction, budget for the cost of potential sanctions.

- Staff for continuous model ops. If you go open, you will need model ops, data ops, and infra engineers. Hire or contract accordingly. Being understaffed in this area leads to outages and costly errors.

- Mix and match deliberately. Use proprietary APIs where they save time and offer legal protection; self-host where scale and control are important.
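Mapping token economics and computing a payback period both reduce to simple arithmetic once you log tokens per feature. A minimal sketch, assuming a usage log of (feature, tokens) events, a single blended price per million tokens, and revenue attributed per feature; all names and numbers are hypothetical.

```python
import math
from collections import defaultdict

def feature_economics(usage_events, usd_per_m_tokens, revenue_by_feature):
    """Aggregate tokens per feature and rank features by token cost per dollar of revenue.

    Returns (feature, tokens, cost_usd, cost_to_revenue) rows, worst ratio first;
    features with no attributed revenue get an infinite ratio and sort to the top.
    """
    tokens = defaultdict(int)
    for feature, n_tokens in usage_events:
        tokens[feature] += n_tokens
    rows = []
    for feature, n in tokens.items():
        cost = n / 1e6 * usd_per_m_tokens
        revenue = revenue_by_feature.get(feature, 0.0)
        ratio = cost / revenue if revenue > 0 else math.inf
        rows.append((feature, n, cost, ratio))
    return sorted(rows, key=lambda r: r[3], reverse=True)

def payback_months(upfront_usd, current_monthly_usd, new_monthly_usd):
    """Whole months until savings repay the upfront spend (hardware, migration work).

    new_monthly_usd should include added SRE/ops salaries, not just GPU costs.
    Returns None if the migration never pays back.
    """
    savings = current_monthly_usd - new_monthly_usd
    if savings <= 0:
        return None
    return math.ceil(upfront_usd / savings)

if __name__ == "__main__":
    events = [("chat", 3_000_000), ("summaries", 1_000_000), ("chat", 1_000_000)]
    for row in feature_economics(events, 2.0, {"chat": 100.0, "summaries": 50.0}):
        print(row)
    print(payback_months(120_000, 30_000, 20_000))  # 12
```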

What vendors are likely to do next (educated bets)

- OpenAI will continue to add tiered pricing, offering cheaper mini variants and realtime options while keeping flagship performance behind higher prices. They will focus on defensive moves (improved security, enterprise agreements) to keep large customers.

- Meta will continue to support the Llama family and likely push for broader ecosystem adoption. Expect better deployment tooling and partnerships that make hosting Llama easier.

- DeepSeek and other Chinese vendors will continue to compete aggressively at home and export their models wherever possible, but regulatory pressure will shape how and where they can operate. DeepSeek’s regulatory difficulties in multiple countries are recent evidence.

- Cloud and infra vendors will create managed services for open models (e.g., fully managed Llama hosting) to capture the gap between DIY hosting and proprietary APIs – essentially pairing the convenience of an API with lower long-term costs. Expect more managed offerings and “inference as a service,” especially for open models.

A Few Myths, Quickly Debunked

Myth 1: Open source models are inherently less capable.

Debunked: The capability gap has narrowed significantly. Community fine-tuning, instruction tuning, and ensembling techniques make open models competitive on many tasks. The edge is now in integration and tooling, not in raw capability.

Myth 2: Proprietary APIs are always more secure.

Debunked: Security is multi-dimensional. Proprietary vendors may provide enterprise agreements and compliance features, but you also have to trust their data handling. Self-hosting offers more control but requires more work to reach the same level. The DeepSeek example shows that vendor origin is important for national security and data governance.

Myth 3: You have to choose one or the other.

Debunked: Hybrid use is the practical default for most companies. Use the right tool for the right job, and re-evaluate as your usage and technical maturity evolve.

Final Verdict — Practical Roadmap (for CTOs and Product Leads)

I’ll give you a solid, step-by-step plan. No fluff.

1. Inventory: Measure today’s token usage by feature over the last 90 days. Identify the top 10% of features that use 90% of tokens.

2. Experiment: For the top token consumers, run a controlled trial:

- Replace API calls in a canary environment with a Llama 3 variant (quantized, tuned).

- Measure latency, hallucination rate, and user satisfaction.

- Compare estimated infra + ops costs versus direct token costs.

3. Hybrid deployment: Implement a routing layer:

- Critical/Low-Latency/High-Quality → Proprietary API (e.g., GPT-4o).

- High-volume, low-risk → self-hosted Llama 3 or equivalent.

- Monitor and switch dynamically based on load and SLO.

4. Optimize prompts and context: Aggressively compress and cache. Invest a few engineer-weeks in building rolling reference summaries of chat history. This dramatically reduces token burn.

5. Negotiation: If your API bill is large, talk to the providers. Volume discounts exist and may change your calculations.

6. Legal and compliance checks: For any non-US or sensitive data, verify vendor hosting locations and regulatory compliance. The international scrutiny DeepSeek faced is a cautionary tale; don’t assume cheap = approved.

7. Reassess quarterly: The competitive landscape moves quickly. Re-benchmark every quarter and be prepared to change workloads as vendors change pricing, capacity, or compliance status.
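Step 3’s routing layer can start as a few lines of code: critical traffic always goes to the proprietary API, and bulk traffic goes to the self-hosted model unless that pool’s recent p95 latency breaches its SLO, in which case it spills over. This is a hypothetical sketch with made-up backend labels and a simple nearest-rank percentile over a sliding window; a production router would also track error rates and cost.

```python
import math
from collections import deque

class LatencyWindow:
    """Sliding window of recent request latencies for one backend."""

    def __init__(self, size=100):
        self.samples = deque(maxlen=size)  # oldest samples drop off automatically

    def record(self, latency_ms):
        self.samples.append(latency_ms)

    def p95(self):
        """Nearest-rank 95th percentile; 0.0 when there is no data yet."""
        if not self.samples:
            return 0.0
        ordered = sorted(self.samples)
        rank = math.ceil(0.95 * len(ordered))
        return ordered[rank - 1]

def route(tier, self_hosted_latency, slo_p95_ms):
    """Return a (hypothetical) backend label for a request tier."""
    if tier == "critical":
        return "proprietary-api"
    if self_hosted_latency.p95() > slo_p95_ms:
        return "proprietary-api"  # spill over while the self-hosted pool is out of SLO
    return "self-hosted"

if __name__ == "__main__":
    window = LatencyWindow(size=100)
    for _ in range(10):
        window.record(120.0)
    print(route("bulk", window, slo_p95_ms=500))  # self-hosted
    for _ in range(10):
        window.record(2000.0)
    print(route("bulk", window, slo_p95_ms=500))  # proprietary-api
```

One design caveat: once traffic spills over, the self-hosted window stops receiving samples, so a real router would send a trickle of probe traffic or age out old samples to let that pool recover.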

Conclusion – The Human Truth About Cost and Control

Here’s the clear conclusion: “The Death of Proprietary API Cost” is not a single event – it’s a process.

- For some organizations (large volume shops with engineering muscle), open models will significantly reduce marginal costs and win.

- For others (startups, regulated businesses, and teams that value time to market), proprietary APIs will remain the right choice due to their convenience, SLAs, and downstream benefits.

- For most organizations, the winning approach is a hybrid: treat models like infrastructure components and decide on a case-by-case basis.

We live in a world where choice is the new commodity. That’s great – but it also means you need to think more about tradeoffs, model governance, and the economics of sustainability. The cheapest option on day one can become the most expensive when compliance, outages, or geopolitical risk come up.

Frequently Asked Questions (FAQ)

Q: Is open-source AI really reducing proprietary API costs?

A: Partly. It’s putting pressure on them, not killing them. Open models like Llama 3 reduce the marginal cost of scale, but they introduce infrastructure, operations, and compliance costs. Proprietary APIs still win on speed, reliability, and low engineering overhead. The shift is from exclusive reliance to selective use, not to complete replacement.

Q: Which is cheaper overall: self-hosting Llama 3 or using GPT-4o?

A: It depends not on ideology, but on volume and maturity.

1) Low to medium usage → GPT-4o is generally cheaper considering zero infra and ops.

2) Very high usage (hundreds of millions to billions of tokens/month) → Self-hosting Llama 3 can be cheaper, but only if you already have GPU infrastructure and experienced ML/infra engineers.

If you don’t have both, your “savings” disappear quickly.

Q: Is DeepSeek-V3 really open source?

A: Not in the way most developers assume. DeepSeek openly publishes weights and research, but:

1) Hosting is often vendor-controlled

2) Licensing and data handling are opaque

3) Regulatory acceptance varies by country

So treat it as open-weight, not fully open and risk-free.

Q: Are open models as good as GPT-4o in real products?

A: For many tasks, yes. For everything, no.

Open models now match proprietary models in:

1) Text generation

2) Summarization

3) Classification

4) Internal tools

They still lag behind in:

1) Complex tool orchestration

2) Multimodal reliability

3) Long-context reasoning at low latency

The gap is smaller than before, but it’s still there.

Q: Why do companies still use expensive proprietary APIs?

A: Because engineering time costs more than tokens.

Proprietary APIs offer:

1) Managed Scaling

2) SLA

3) Rapid Iteration

4) Built-in Security Layers

5) Enterprise Compliance Options

If your team is small or your product is early, paying per token is often cheaper than running GPUs and model ops.

Q: What hidden costs do people ignore with open-source models?

A: Many – and they are not small:

1) GPU purchase or cloud reservation costs

2) Model serving, batching and autoscaling

3) Monitoring, logging and rollback systems

4) Security hardening and compliance audits

5) Ongoing fine-tuning and evaluation

Open models move costs from vendors to your balance sheet.

Q: Is open source the future of AI models?

A: Yes — but not across the entire stack.

Open models will dominate:

1) Foundation models

2) Custom fine-tuning

3) Internal and edge deployment

Proprietary platforms will dominate:

1) Orchestration

2) Tool ecosystems

3) Compliance-heavy environments

The future is neither open nor closed – it’s modular.

Q: What is the smartest AI spending strategy in 2026?

A: Stop thinking in absolutes.

The winning strategy is:

1) Accurately measure token usage

2) Optimize prompts and context windows

3) Mix open and proprietary models

4) Reevaluate quarterly as costs and capacity change

Blind loyalty to either side destroys budgets.