GraphRAG + Long Context in 2026: Why Vector Search Finally Hit Its Ceiling

In 2026, AI did not suddenly “break”. It outgrew the assumptions we were forcing on it.

For nearly half a decade, retrieval-augmented generation (RAG) followed the same tired formula:

- Split the documents into chunks

- Convert the chunks into vectors

- Run a similarity search

- Fill the top results into a prompt

- Hope the model figures it out

It worked – until it didn’t.

As enterprise data became more interconnected, more longitudinal, and more disorganized, teams ran into the same wall: vector search is good at matching text, terrible at understanding systems.

The change taking place now is not cosmetic. It is architectural.

GraphRAG paired with long-context models is not an upgrade – it is a correction.

This article explains why vector-only RAG reached its limits, how GraphRAG + long context actually works in production, and what serious teams are building in 2026 instead of praying for cosine similarity to save them.

No hype. No vendor fluff. Just reality.

The Original Promise of Vector Search (and Why It Was Never Enough)

Vector search was attractive because it solved a real problem cheaply.

Embeddings let us capture semantic similarity instead of keyword matching. That was revolutionary in 2021-2023. Suddenly, “vacation policy” and “paid leave rules” were recognized as related.

But here’s the uncomfortable truth:

Semantic similarity is not understanding.

It is pattern recognition at the sentence level. That’s it.

Vector search never knew:

- Why something happened

- How two facts were connected

- Which relationship was more important

- What changed over time

We pretended it did because the small demos worked.

At scale, it breaks down.

The Core Failure: Vector Search Is Structurally Blind

Let’s stop dancing around it. Vector search fails for one fundamental reason:

It treats knowledge as discrete pieces rather than a connected system.

1. The Context Cliff Problem

Chunking has always been a hack.

We split documents into 300-1,000 token blocks not because that was “best”, but because models couldn’t handle more. This forced systems to destroy the natural context.
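To make the context loss concrete, here is a minimal sketch of fixed-size chunking. The `chunk_tokens` helper and the whitespace "tokenizer" are assumptions for illustration, not any particular library's splitter, and the chunk size is kept tiny so the effect is visible.

```python
def chunk_tokens(tokens, size, overlap=0):
    """Split a token list into fixed-size windows (hypothetical helper)."""
    if size <= 0:
        raise ValueError("size must be positive")
    if not 0 <= overlap < size:
        raise ValueError("overlap must satisfy 0 <= overlap < size")
    if not tokens:
        return []
    step = size - overlap
    # Stop once the remaining tokens are already covered by the previous window.
    last_start = max(len(tokens) - overlap, 1)
    return [tokens[i:i + size] for i in range(0, last_start, step)]


# Whitespace splitting stands in for a real tokenizer here.
text = (
    "In 2022 the CEO approved the restructuring plan "
    "Two years later the supplier network collapsed entirely"
)
chunks = chunk_tokens(text.split(), size=8)

for index, chunk in enumerate(chunks):
    print(index, " ".join(chunk))
```

With this split, the cause ("restructuring") and the effect ("collapsed") land in different chunks, so nothing in either chunk records that they are related. Overlap softens the boundary but does not restore the link across larger gaps.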

Ask a question that covers time, causality, or organizational structure, and vector search falls apart.

Example:

“How did the CEO’s 2022 restructuring decision contribute to the 2024 supply chain collapse?”

The vector system can retrieve:

- A chunk about the CEO

- A chunk about restructuring

- A chunk about the supply chain

What it won’t reliably retrieve is the chain of causality that connects them.

Why?

Because cosine similarity does not encode:

- Temporal dependency

- Organizational hierarchy

- Cause-and-effect

It simply says: These sentences look alike.

That’s not logic. That’s pattern matching.
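A toy example shows what a similarity score can and cannot carry. This sketch uses word counts as a stand-in "embedding", which is a deliberate simplification: real embedding models are far richer and somewhat order-sensitive. The point it illustrates still holds for them, because the output is a single number with no slot for direction, time, or hierarchy.

```python
import math
from collections import Counter


def bag_of_words(text: str) -> Counter:
    """Toy 'embedding': lowercase word counts. Word order is discarded."""
    return Counter(text.lower().split())


def cosine_similarity(a: Counter, b: Counter) -> float:
    """Cosine similarity between two sparse count vectors."""
    dot = sum(a[word] * b[word] for word in a)
    norm_a = math.sqrt(sum(v * v for v in a.values()))
    norm_b = math.sqrt(sum(v * v for v in b.values()))
    if norm_a == 0 or norm_b == 0:
        return 0.0
    return dot / (norm_a * norm_b)


claim = "the restructuring caused the supply chain collapse"
reversed_claim = "the supply chain collapse caused the restructuring"

score = cosine_similarity(bag_of_words(claim), bag_of_words(reversed_claim))
print(round(score, 6))
```

The two sentences assert opposite causal directions, yet under this toy representation they are indistinguishable. A graph edge such as `(restructuring, caused, collapse)` keeps the direction explicit.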

2. Global questions could never be solved with Vector RAG

Vector RAG basically cannot answer global questions without lying.

Questions like:

- “What are the most common topics in 600 customer complaints?”

- “Summarize cultural issues across five years of internal reviews.”

- “What systemic risks appear frequently in these incident reports?”

Vector retrieval captures the “top-K” chunks and ignores the rest.

That’s not summarization – that’s sampling bias.

If you’ve ever wondered why RAG systems get confused during summaries, this is why. They are summarizing what they saw, not what exists.
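The sampling problem is easy to show with numbers. The sketch below uses a made-up corpus of 600 pre-labeled complaints and a stand-in `top_k` function in place of a real similarity search; both are assumptions for illustration only.

```python
from collections import Counter

# Hypothetical corpus: 600 complaints, each already labeled with a topic.
complaints = (
    [{"id": i, "topic": "billing"} for i in range(0, 300)]
    + [{"id": i, "topic": "shipping delays"} for i in range(300, 500)]
    + [{"id": i, "topic": "account lockout"} for i in range(500, 600)]
)


def topic_counts(docs):
    """Count how often each topic appears in a set of documents."""
    return Counter(doc["topic"] for doc in docs)


def top_k(docs, query_topic, k):
    """Stand-in for similarity search: matching documents rank first."""
    ranked = sorted(docs, key=lambda doc: doc["topic"] != query_topic)
    return ranked[:k]


full_view = topic_counts(complaints)
sampled_view = topic_counts(top_k(complaints, "shipping delays", k=10))
coverage = 10 / len(complaints)

print("whole corpus:", full_view.most_common())
print("top-10 only: ", sampled_view.most_common())
print(f"share of corpus seen: {coverage:.1%}")
```

A summary written from the top-10 view would report shipping delays as the only topic, even though billing is the most frequent one in the corpus. Raising K narrows the gap but never closes it for a truly global question.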

People tried to patch this with:

- Recursive summarization

- Pre-computed document summarization

- Sliding windows

It’s all duct tape.

3. Entity Identity Disaster

Vector systems do not resolve identity. Period.

They don’t know:

- Whether “Alex” in document A is the same as “Alex” in document B

- Whether “Java” refers to an island, a language, or coffee

- Whether “company” refers to the parent company or its subsidiary

Pronouns alone destroy precision.

Engineers quietly accepted this because it seemed “too complicated” to fix.

It turned out to be more expensive to ignore.

The Shift: Meaning Resides in Relationships, Not in Text

By 2025, the best teams stopped asking:

“How can we retrieve better chunks?”

They started asking:

“How do we model knowledge the way humans actually think?”

Humans don’t remember information as floating paragraphs. We remember:

- People

- Events

- Decisions

- Causes

- Consequences

And most importantly: how they connect.

That’s where GraphRAG comes in.

What GraphRAG really is (not the marketing version)

GraphRAG is not “RAG with a graph database”.

Done correctly, it is a knowledge modeling pipeline.

At its core, GraphRAG performs three functions:

- Extracts entities (people, organizations, products, concepts, dates)

- Defines relationships (owns, causes, depends on, is informed by, happened before)

- Stores them as a traversable graph

Instead of searching text, the system reasons over structure.

If vector search asks:

“Which text looks similar?”

GraphRAG asks:

“What facts are connected, and how?”

That distinction changes everything.
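As a rough illustration of the three functions listed above (entities, relationships, a traversable store), here is a minimal in-memory sketch. The class and field names (`Entity`, `Relation`, `KnowledgeGraph`) are invented for this example; a production system would more likely sit on a graph database and use an extraction model to populate it.

```python
from collections import defaultdict
from dataclasses import dataclass, field


@dataclass(frozen=True)
class Entity:
    id: str
    type: str  # e.g. "person", "organization", "decision"


@dataclass(frozen=True)
class Relation:
    source: str     # id of the source entity
    predicate: str  # e.g. "defined", "depends_on", "happened_before"
    target: str     # id of the target entity


@dataclass
class KnowledgeGraph:
    entities: dict = field(default_factory=dict)
    outgoing: dict = field(default_factory=lambda: defaultdict(list))

    def add_entity(self, entity: Entity) -> None:
        self.entities[entity.id] = entity

    def add_relation(self, relation: Relation) -> None:
        # Refuse edges that point at entities nobody has defined yet.
        if relation.source not in self.entities or relation.target not in self.entities:
            raise KeyError("both endpoints must be added as entities first")
        self.outgoing[relation.source].append(relation)

    def neighbors(self, entity_id: str) -> list:
        """Return (predicate, target_id) pairs reachable in one hop."""
        return [(r.predicate, r.target) for r in self.outgoing.get(entity_id, [])]


graph = KnowledgeGraph()
graph.add_entity(Entity("ceo", "person"))
graph.add_entity(Entity("strategy_2022", "decision"))
graph.add_relation(Relation("ceo", "defined", "strategy_2022"))
print(graph.neighbors("ceo"))
```

Requiring both endpoints to exist before an edge is accepted is one simple way to keep extraction errors from silently creating dangling relationships.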

Example: Same question, two architectures

Question:

“How did the CEO’s 2022 strategy influence the 2024 supply chain crisis?”

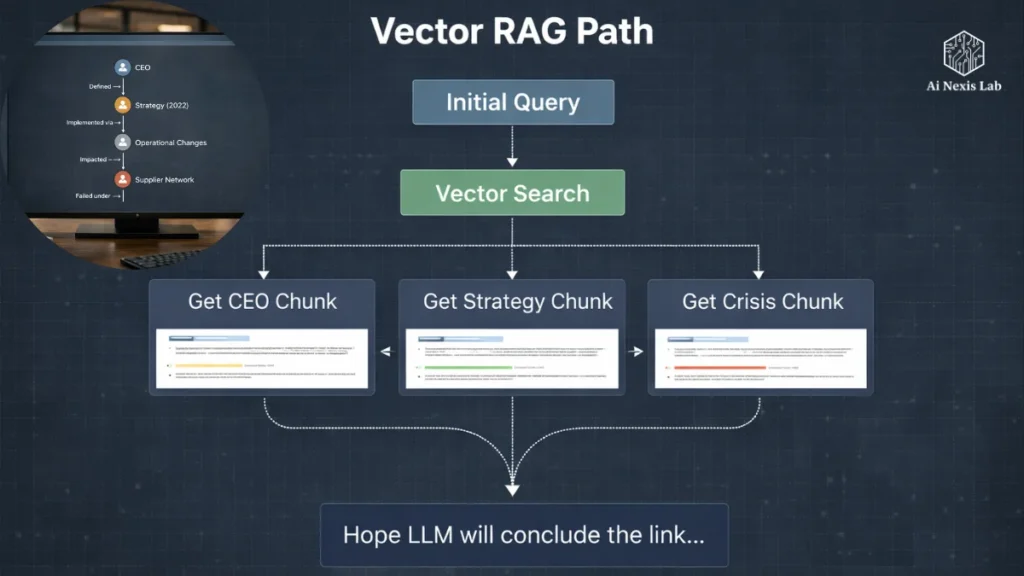

Vector RAG Path

- Retrieve a CEO chunk

- Retrieve a strategy chunk

- Retrieve a crisis chunk

- Hope the LLM infers the link

GraphRAG Path

- Node: CEO

- Edge: defined → Strategy (2022)

- Edge: implemented by → Operational Changes

- Edge: impacted → Supplier Network

- Edge: failed under → Crisis (2024)

The answer is not predicted.

It is walked.

This is the difference between guessing and knowing.
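"Walking" the answer can be sketched with a plain breadth-first search. The edge list below mirrors the GraphRAG path in the example; the predicate names and the `find_path` helper are illustrative assumptions, not output from a real extraction pipeline.

```python
from collections import deque

# Adjacency list: node -> list of (predicate, target). Illustrative data only.
EDGES = {
    "CEO": [("defined", "Strategy (2022)")],
    "Strategy (2022)": [("implemented_by", "Operational Changes")],
    "Operational Changes": [("impacted", "Supplier Network")],
    "Supplier Network": [("failed_under", "Crisis (2024)")],
}


def find_path(edges, start, goal):
    """Breadth-first search. Returns a list of (source, predicate, target)
    hops from start to goal, or None if no directed path exists."""
    queue = deque([(start, [])])
    visited = {start}
    while queue:
        node, path = queue.popleft()
        if node == goal:
            return path
        for predicate, target in edges.get(node, []):
            if target not in visited:
                visited.add(target)
                queue.append((target, path + [(node, predicate, target)]))
    return None


path = find_path(EDGES, "CEO", "Crisis (2024)")
for source, predicate, target in path:
    print(f"{source} --{predicate}--> {target}")
```

The returned list of hops is also the explanation: each step can be shown to a reviewer alongside the source documents the edge was extracted from.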

Knowledge Graphs Solved Problems We Pretended Didn’t Exist

Once teams seriously adopted GraphRAG, many “inevitable” problems disappeared.

1. Multi-hop reasoning has become commonplace

Questions like:

- “What risks do vendors indirectly present?”

- “What policy changes impacted employee attrition two years later?”

- “How did regulatory shifts contribute to the decline in revenue?”

These are graph traversal problems, not search problems.

Vector search was never meant to handle them.

2. Entity resolution happens once, not at every prompt

In GraphRAG, entity resolution happens during ingestion, not during generation.

This means that:

- “It” is resolved before inference

- Ambiguous names are disambiguated

- Time-bound identities are preserved

The LLM stops wasting tokens trying to figure out who is who.

Accuracy increases immediately.
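A minimal sketch of ingestion-time resolution, assuming a hand-maintained alias table. The `ALIASES` entries and the canonical ids are invented for illustration; real pipelines typically combine rules like this with model-based matching and human review.

```python
import re

# Hypothetical alias table mapping surface forms to canonical entity ids.
ALIASES = {
    "acme corp": "org:acme",
    "acme corporation": "org:acme",
    "acme": "org:acme",
    "acme logistics": "org:acme-logistics",  # subsidiary, kept distinct
}


def normalize(mention: str) -> str:
    """Lowercase and collapse whitespace so lookups are consistent."""
    return re.sub(r"\s+", " ", mention.strip().lower())


def resolve(mention: str, aliases=ALIASES):
    """Return the canonical id for a mention, or None if it is unknown."""
    return aliases.get(normalize(mention))


def resolve_triple(subject: str, predicate: str, obj: str):
    """Resolve both ends of an extracted relation before it enters the graph."""
    subject_id, object_id = resolve(subject), resolve(obj)
    if subject_id is None or object_id is None:
        return None  # route to review instead of guessing an identity
    return (subject_id, predicate, object_id)


print(resolve_triple("Acme Corporation", "owns", "ACME  Logistics"))
print(resolve_triple("Alex", "works_at", "Acme"))
```

Returning `None` for unknown mentions is a design choice: an unresolved mention is queued for review rather than merged into the wrong node, which is the failure the section describes.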

3. Traceability stops being a buzzword

One of the biggest enterprise blockers to AI adoption has always been:

“Explain why the model said this.”

Vector systems can’t do that in a meaningful way. They simply say:

“These chunks were similar.”

GraphRAG systems can say:

“This answer comes from this chain of relationships.”

It is auditable. It is defensible.

And regulators love it.

Why Long Context Completely Changed the Economics

GraphRAG alone is powerful – but it didn’t fully shine until long-context models became practical.

In 2024, 128k tokens seemed huge. It wasn’t. It just seemed big compared to what came before.

In 2026, models from providers like Google (Gemini family) and others routinely handle 1-10 million tokens, with:

- Intelligent caching

- Retrieval-aware attention

- Dramatically lower cost per token

That completely changed the trade-offs.

The Old Constraint: “We Must Chunk”

Chunking was not best practice.

It was a workaround for a limitation.

Now, that limitation has largely been removed.

Instead of feeding:

- 12 separate snippets

You can feed:

- Full document clusters

- Full conversation history

- Multi-year timelines

The model can actually see the whole picture.

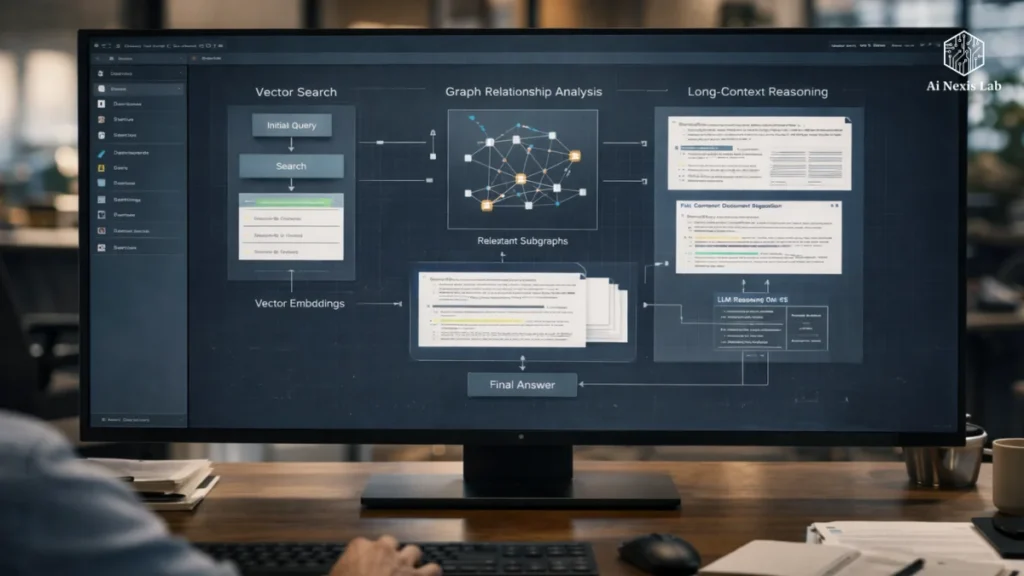

New Pattern: Map First, Read Second

Modern systems follow this flow:

- GraphRAG identifies relevant subgraphs

- Entire communities of documents are loaded

- The model reasons over the full context

The graph answers where to look.

The long context answers what it means.

This separation is important.
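The "map first, read second" flow can be sketched as a document loader driven by a subgraph. Everything here is an assumption for illustration: the `build_context` helper, the node-to-document mapping, and the four-characters-per-token estimate, which is only a rough rule of thumb and not a real tokenizer.

```python
def estimate_tokens(text: str) -> int:
    """Very rough token estimate (about 4 characters per token)."""
    return max(1, len(text) // 4)


def build_context(subgraph_nodes, node_to_docs, documents, token_budget):
    """Collect whole documents attached to the subgraph, in node order,
    skipping duplicates and anything that would exceed the budget."""
    selected, used, seen = [], 0, set()
    for node in subgraph_nodes:
        for doc_id in node_to_docs.get(node, []):
            if doc_id in seen:
                continue
            cost = estimate_tokens(documents[doc_id])
            if used + cost > token_budget:
                continue  # too large for the remaining budget
            seen.add(doc_id)
            selected.append(doc_id)
            used += cost
    return selected, used


# Illustrative data: the graph step has already picked these nodes.
documents = {"d1": "x" * 400, "d2": "y" * 800, "d3": "z" * 2000}
node_to_docs = {
    "Strategy (2022)": ["d1", "d2"],
    "Supplier Network": ["d2", "d3"],
}

selected, used = build_context(
    ["Strategy (2022)", "Supplier Network"], node_to_docs, documents, token_budget=400
)
print(selected, used)
```

The graph decides which documents are candidates. The budget only decides how many of them fit, so with a multi-million-token window the same code simply loads more of the community.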

2024 vs. 2026: Architectural Reality Check

| Dimension | Vector RAG (2024) | GraphRAG + Long Context (2026) |
|---|---|---|
| Core Logic | Similarity math | Relational reasoning |
| Retrieval Unit | Text chunks | Subgraphs & document communities |
| Reasoning Depth | Single-hop | Multi-hop, temporal |
| Global Queries | Weak / hallucinated | Native capability |
| Debuggability | Opaque | Traceable paths |
| Maintenance Cost | High (chunk tuning) | Front-loaded, stable |
| Enterprise Trust | Low | High |

Vector RAG is not “dead”.

It simply outlived its usefulness as a primary system.

Why This Isn’t Just “Another AI Trend”

Here’s the unsettling part for those clinging to vector-only systems:

This change is not optional.

If your data contains:

- Time

- Hierarchy

- Dependencies

- Causation

- Multiple stakeholders

Then pure vector search will always underperform.

No amount of prompt engineering will fix it.

The belief that “vector search is enough if you tune it”

This idea needs to die.

You can:

- Tune chunk size

- Adjust overlap

- Re-rank results

- Stack retrievers

You are still modeling knowledge as independent pieces.

That’s the wrong abstraction.

Reality: Vector Search Is Still Important (But It’s Not the Brain)

It has simply been demoted.

In serious 2026 systems, vector search is used as:

- A fast, coarse entry point

- A high-recall signal generator

It then delegates to:

- Graph traversal

- Structured filtering

- Long-context reasoning

Think of vector search as an intern who collects files.

GraphRAG is the analyst.

The LLM is the decision maker.
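The division of labor above can be sketched as "vector hits seed the graph, the graph expands them". In this sketch the seed list stands in for the output of a vector search, and `expand_from_seeds` is a hypothetical helper, not a library call.

```python
def expand_from_seeds(seeds, edges, max_hops):
    """Return every node within max_hops of the seed nodes."""
    reached = set(seeds)
    frontier = set(seeds)
    for _ in range(max_hops):
        next_frontier = set()
        for node in frontier:
            for neighbor in edges.get(node, []):
                if neighbor not in reached:
                    reached.add(neighbor)
                    next_frontier.add(neighbor)
        frontier = next_frontier
    return reached


# Illustrative graph, stored as node -> connected nodes.
edges = {
    "Supplier Network": ["Operational Changes", "Crisis (2024)"],
    "Operational Changes": ["Strategy (2022)"],
    "Strategy (2022)": ["CEO"],
}

# Assume a vector search over node descriptions returned this one hit.
vector_seeds = ["Supplier Network"]

one_hop = expand_from_seeds(vector_seeds, edges, max_hops=1)
two_hops = expand_from_seeds(vector_seeds, edges, max_hops=2)
print(sorted(one_hop))
print(sorted(two_hops))
```

The vector step only has to land somewhere near the right neighborhood. The hop limit then controls how much connected context is pulled in, which is far easier to reason about than tuning K.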

What Production GraphRAG Pipelines Look Like in 2026

A real pipeline looks like this:

1. Ingestion

- Document Parsing

- Extract Entities and Relations

- Identity Resolution

- Build Graphs (often using tools like Neo4j)

2. Indexing

- Optional Vector Embeddings for Coarse Retrieval

- Community Detection

- Temporal Tagging

3. Query Phase

- Intent Classification (Local vs. Global vs. Causal)

- Graph Traversal to Find Related Subgraphs

- Load Full Documents into Long Context

4. Generation

- Multi-Hop Reasoning

- Source-Grounded Synthesis

- Traceable Output

This is not trivial – but it is stable once built.
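The query phase starts with intent classification, which can be sketched as a small router. The keyword cues below are a deliberately crude stand-in (a production system would more likely use a trained classifier or an LLM call), and the route descriptions are placeholders for real retrieval strategies.

```python
from enum import Enum


class Intent(Enum):
    LOCAL = "local"
    GLOBAL = "global"
    CAUSAL = "causal"


# Hypothetical cue lists. Substring matching is naive and will misfire
# on words such as "because", so treat this strictly as a sketch.
CAUSAL_CUES = ("why", "cause", "contribute", "led to", "influence", "impact")
GLOBAL_CUES = ("most common", "summarize", "overall", "across", "trends", "systemic")

ROUTES = {
    Intent.LOCAL: "entity lookup plus one-hop neighbors",
    Intent.GLOBAL: "community summaries over the whole graph",
    Intent.CAUSAL: "multi-hop path search between the named entities",
}


def classify_intent(question: str) -> Intent:
    """Pick a retrieval strategy from surface cues in the question."""
    text = question.lower()
    if any(cue in text for cue in CAUSAL_CUES):
        return Intent.CAUSAL
    if any(cue in text for cue in GLOBAL_CUES):
        return Intent.GLOBAL
    return Intent.LOCAL


def plan(question: str) -> str:
    """Describe which retrieval route a question would take."""
    intent = classify_intent(question)
    return f"{intent.value}: {ROUTES[intent]}"


print(plan("How did the CEO's 2022 strategy influence the 2024 supply chain crisis?"))
print(plan("What are the most common topics in 600 customer complaints?"))
print(plan("What is the vacation policy?"))
```

Routing first matters because each intent needs a different retrieval unit: a single node, a set of communities, or a path.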

The harsh truth: This requires better engineering discipline

GraphRAG is not a shortcut.

It forces you to:

- Define schema

- Take care of data quality

- Model relationships explicitly

Teams that refuse to do this end up with brittle systems anyway – just slower and less honest.

The payoff is worth it.

Conclusion: This is not a “vector killer”. It is a moment of maturity.

Vector search did not fail.

We failed by asking it to do something it was never designed to do.

Meaning does not reside in embeddings alone.

It resides in:

- Connections

- History

- Structure

- Consequences

GraphRAG paired with long-context models finally aligns AI systems with how real knowledge works.

This is how we move from chatbots that sound confident to systems that actually know what they’re talking about.

Frequently Asked Questions

Q: Is GraphRAG overkill for small datasets?

A: Yes. If your data fits into a small PDF and you’re answering FAQs, don’t bother. GraphRAG shines when relationships matter.

Q: Do I need a graph database?

A: Not strictly, but using one (like Neo4j) makes traversal, visualization, and debugging much easier.

Q: Are long-context models expensive?

A: Not anymore. With caching and selective loading, the cost is often less than repeatedly over-fetching chunks.

Q: Does this eliminate hallucinations?

A: No system does this perfectly – but GraphRAG dramatically reduces the structural hallucinations caused by missing context.

Q: Can I connect this to existing vector pipelines?

A: Yes. Most production systems do exactly that.

Q: How long does implementation take?

A: For a serious enterprise setup: weeks to months. Anyone claiming “one-day GraphRAG” is lying.

Q: What is the biggest mistake teams make?

A: Treating GraphRAG as a plug-in rather than an architectural shift.